The Difference Between a Bit and a Byte: A Comprehensive Guide

Understanding the difference between bits and bytes is crucial in digital computing. Whether you're a tech enthusiast or a professional in the field, you need a firm grasp of these fundamental units of data. This article explains the difference between bits and bytes, covering their characteristics, applications, and significance in everyday computing.

What is a Bit?

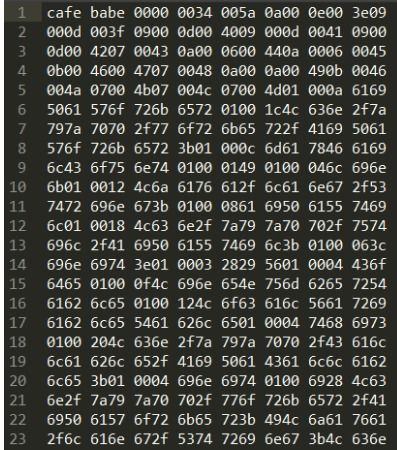

A bit, short for binary digit, is the smallest unit of information in computing. It can represent either a 0 or a 1, which are the two fundamental values in binary notation. Bits are the building blocks of all digital data, and they are used to store and transmit information in computers and other digital devices.
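Python has no dedicated bit type, so a common way to look at individual bits is to read them out of an integer with bitwise operators. A minimal sketch; the helper name `bits_of` is hypothetical and only illustrates the idea:

```python
def bits_of(value: int, width: int = 8) -> list[int]:
    """Return the bits of a non-negative integer, most significant bit first.

    `bits_of` is a hypothetical helper written for this illustration.
    """
    if value < 0 or value >= 1 << width:
        raise ValueError(f"value must fit in {width} bits")
    # Shift each bit position down to the lowest place, then mask off everything else.
    return [(value >> i) & 1 for i in range(width - 1, -1, -1)]


# Every element of the result is a single bit: 0 or 1.
print(bits_of(5))          # [0, 0, 0, 0, 0, 1, 0, 1]
print(format(5, "08b"))    # 00000101 (built-in formatting gives the same pattern)
```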

What is a Byte?

A byte is a unit of digital information that, on virtually all modern systems, consists of eight bits. It is the smallest addressable unit of memory on most computers and the standard unit for measuring storage. Bytes are used to represent characters, numbers, and other types of data. ASCII defines 128 characters using 7 bits, so one byte is enough to hold a single ASCII character. Unicode defines far more characters than one byte can distinguish, so its encodings use more space: UTF-8 uses one to four bytes per character, UTF-16 uses one or two 16-bit units, and UTF-32 always uses 32 bits.
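The number of bytes a character needs depends on the encoding, which is easy to check with Python's built-in `str.encode`. A small sketch; the helper name `encoded_sizes` is hypothetical, and the `-le` codec variants are used only so Python does not prepend a byte-order mark that would inflate the counts:

```python
def encoded_sizes(ch: str) -> dict[str, int]:
    """Bytes needed to store `ch` in three Unicode encodings (hypothetical helper)."""
    return {
        "utf-8": len(ch.encode("utf-8")),
        # Plain "utf-16"/"utf-32" would add a byte-order mark; "-le" avoids it.
        "utf-16": len(ch.encode("utf-16-le")),
        "utf-32": len(ch.encode("utf-32-le")),
    }


print("A".encode("ascii"))  # b'A' (one byte)

try:
    "é".encode("ascii")
except UnicodeEncodeError:
    print("'é' has no ASCII encoding")

for ch in ["A", "é", "€", "😀"]:
    print(repr(ch), encoded_sizes(ch))
# 'A'  -> utf-8: 1, utf-16: 2, utf-32: 4
# 'é'  -> utf-8: 2, utf-16: 2, utf-32: 4
# '€'  -> utf-8: 3, utf-16: 2, utf-32: 4
# '😀' -> utf-8: 4, utf-16: 4, utf-32: 4 (UTF-16 needs two 16-bit units here)
```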

Difference between Bit and Byte

Now that we have a basic understanding of bits and bytes, let’s explore the differences between them:

| Aspect | Bit | Byte |
|---|---|---|
| Size | 1 bit | 8 bits |
| Possible values | 2 (0 or 1) | 256 (2^8), e.g., 0 to 255 |
| Usage | Smallest unit of information | Standard unit of storage and memory addressing |
| Abbreviation | b (as in Mbps) | B (as in MB) |

As the table shows, a byte is eight times larger than a bit, but it can represent far more than eight times as many values. Each additional bit doubles the number of distinct patterns, so eight bits give 2^8 = 256 combinations. Read as an unsigned number, a byte therefore ranges from 0 to 255, while a single bit can only be 0 or 1.
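The arithmetic behind the 0 to 255 range can be checked in a few lines of Python. The signed range below assumes two's complement, the representation used by virtually all modern hardware; it is an addition here, since the text above only discusses the unsigned range:

```python
BITS_PER_BYTE = 8

# n bits can take 2**n distinct patterns.
patterns = 2 ** BITS_PER_BYTE                          # 256
unsigned_range = (0, patterns - 1)                     # (0, 255)
signed_range = (-(patterns // 2), patterns // 2 - 1)   # (-128, 127), two's complement

# The same byte (all eight bits set) read two different ways:
raw = bytes([0b11111111])
print(int.from_bytes(raw, "big", signed=False))  # 255
print(int.from_bytes(raw, "big", signed=True))   # -1
```

The bit pattern itself never changes; only its interpretation does, which is why file formats and protocols must state whether a byte is signed or unsigned.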

Applications of Bits and Bytes

Bits and bytes are used in various applications across different industries. Here are some examples:

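- Computing: Computers store and process all data as bits, grouped into bytes. File sizes, memory capacity, and disk space are all measured in bytes.
- Networking: Data travels over networks as a stream of bits, so connection speeds are usually quoted in bits per second (for example, Mbps), while the files being transferred are measured in bytes. A 100 Mbps link therefore carries at most 12.5 megabytes of raw data per second, before protocol overhead.
- Graphics: Color depth is expressed in bits per pixel; for example, 24-bit color uses 3 bytes per pixel. Color depth and resolution (the number of pixels) together determine how much data an image occupies.
- Audio: Digital audio quality depends on bit depth (bits per sample, such as 16-bit for CD audio) and sampling rate. These, along with the number of channels, determine the size of uncompressed audio data.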
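Each of the conversions in the list above comes down to multiplying or dividing by eight. A minimal Python sketch; the helper names are hypothetical, the sizes describe uncompressed data without file headers or padding, and "megabyte" here means 1,000,000 bytes:

```python
BITS_PER_BYTE = 8


def mbps_to_megabytes_per_s(mbps: float) -> float:
    """Convert a link speed in megabits/s to megabytes/s (hypothetical helper)."""
    return mbps / BITS_PER_BYTE


def raw_image_bytes(width: int, height: int, bits_per_pixel: int) -> int:
    """Uncompressed pixel data size; ignores headers and row padding (hypothetical helper)."""
    return width * height * bits_per_pixel // BITS_PER_BYTE


def pcm_audio_bytes_per_second(sample_rate: int, bit_depth: int, channels: int) -> int:
    """Uncompressed PCM audio data rate in bytes per second (hypothetical helper)."""
    return sample_rate * bit_depth * channels // BITS_PER_BYTE


print(mbps_to_megabytes_per_s(100))               # 12.5
print(raw_image_bytes(1920, 1080, 24))            # 6220800 (about 6.2 MB per frame)
print(pcm_audio_bytes_per_second(44_100, 16, 2))  # 176400 (CD-quality stereo)
```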

Significance in Computing

Bits and bytes are central to computing for several reasons:

- Efficiency: Hardware operates on fixed-size groups of bits (bytes and multi-byte words), which lets computers store and process data compactly and quickly. Packing information tightly, down to individual bits where useful, saves memory and bandwidth.
- Scalability: Larger quantities are built from bytes using standard multiples such as kilobytes, megabytes, and gigabytes. As demand for storage and processing power grows, the same units describe everything from a single character to a data center's capacity.
- Standardization: The 8-bit byte is a near-universal convention, which helps different systems and devices exchange data compatibly.
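One concrete illustration of the efficiency point is storing several yes/no flags in the individual bits of a single byte instead of using one byte (or more) per flag. This is a sketch of the general bit-packing idea, not a technique described in the original; the helper names are hypothetical, and Python's own `bool` objects are far larger than one bit, so the saving applies to the packed byte that is stored or transmitted:

```python
def pack_flags(flags: list[bool]) -> int:
    """Pack up to 8 booleans into one byte; flags[0] becomes the lowest bit."""
    if len(flags) > 8:
        raise ValueError("a single byte holds at most 8 flags")
    byte = 0
    for i, flag in enumerate(flags):
        if flag:
            byte |= 1 << i  # set bit i
    return byte


def unpack_flags(byte: int, count: int = 8) -> list[bool]:
    """Recover `count` booleans from the low bits of a byte."""
    return [bool((byte >> i) & 1) for i in range(count)]


flags = [True, False, True, True, False, False, False, True]
packed = pack_flags(flags)
print(packed, bytes([packed]))  # 141 b'\x8d'
```

Eight flags fit in one byte here, where storing each as its own byte would take eight.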

Conclusion

In conclusion, the difference between bits and bytes is a fundamental concept in computing. Understanding their characteristics, applications, and significance is essential for anyone involved in the field. By grasping the nuances of these units of data, you can better appreciate the complexities of digital computing and its impact on various aspects of our lives.