Understanding Bit AI: A Comprehensive Guide

Bit AI, a term that has gained traction in recent years, refers to how bits, and in particular the number of bits used to represent each value, are used in artificial intelligence systems. Because a bit is the smallest unit of information in computing, the number of bits spent on each number directly affects an AI model's memory footprint, speed, and energy use. In this article, we examine the main dimensions of bit AI and its applications.

Basics of Bits and AI

Before we dive into the specifics of bit AI, it’s essential to understand the basics of bits and their relevance in AI. A bit is the smallest unit of information in computing, representing either a 0 or a 1. In the context of AI, everything a model handles, from input data to its learned parameters (weights), is ultimately encoded as bits, usually grouped into fixed-width numbers such as 32-bit floating-point values. This encoding is what enables machines to learn from, and make decisions based on, vast amounts of information.
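
To make "fixed-width numbers" concrete, the short sketch below prints the exact bit patterns behind two common formats: a 32-bit IEEE 754 float and an 8-bit two's-complement integer. It uses only Python's standard `struct` module; the helper names `float32_bits` and `int8_bits` are illustrative, not part of any AI library.

```python
import struct


def float32_bits(x: float) -> str:
    """Return the 32-bit IEEE 754 single-precision pattern of x as 0s and 1s."""
    # '>f' packs x as a big-endian 32-bit float; '>I' reads the same 4 bytes
    # back as an unsigned integer so its bits can be formatted.
    (as_int,) = struct.unpack(">I", struct.pack(">f", x))
    return format(as_int, "032b")


def int8_bits(n: int) -> str:
    """Return the 8-bit two's-complement pattern of an integer in [-128, 127]."""
    if not -128 <= n <= 127:
        raise ValueError("value does not fit in 8 bits")
    return format(n & 0xFF, "08b")


if __name__ == "__main__":
    print(float32_bits(1.0))  # 00111111100000000000000000000000
    print(int8_bits(-1))      # 11111111
```

The float spends 1 bit on the sign, 8 on the exponent, and 23 on the fraction; the integer spends all 8 bits on a single value. Trading the first kind of representation for the second is the core idea behind quantization.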

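AI models, particularly deep learning models, require large amounts of data to learn effectively, and the models themselves can contain millions or billions of parameters. Every one of these values is stored with a fixed number of bits, so the chosen bit width multiplies directly into memory use and the amount of data that must be moved during computation. By using no more bits than a task actually needs, AI systems can become more efficient while keeping accuracy close to that of a full-precision model.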
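
Because storage grows linearly with bits per parameter, a quick calculation shows why bit width matters. The sketch below estimates the memory needed for the weights alone; the 7-billion-parameter count is a hypothetical example, and the estimate deliberately ignores activations, caches, and metadata such as quantization scales.

```python
def weight_memory_gib(num_params: int, bits_per_param: float) -> float:
    """Approximate storage for the weights alone, in GiB.

    Ignores activations, caches, and per-group metadata such as scales.
    """
    total_bits = num_params * bits_per_param
    return total_bits / 8 / 1024**3  # bits -> bytes -> GiB


if __name__ == "__main__":
    n = 7_000_000_000  # hypothetical 7-billion-parameter model
    for bits in (32, 16, 8, 4, 1):
        print(f"{bits:>2}-bit weights: {weight_memory_gib(n, bits):6.2f} GiB")
```

Halving the bits per parameter halves the weight storage, so moving from 32-bit to 4-bit values shrinks it by a factor of eight.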

Bit AI in Practice

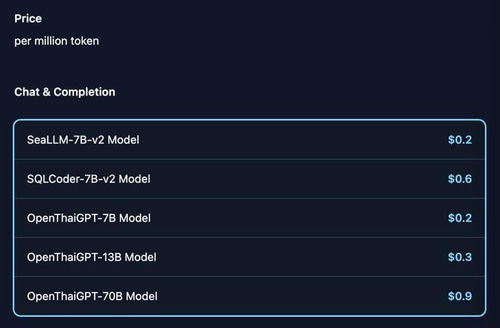

One of the primary applications of bit AI is model quantization. Model quantization converts the 32-bit floating-point numbers used in AI models into lower-precision values, most commonly 8-bit or 4-bit integers. Going from 32-bit floats to 8-bit integers, for example, shrinks the stored weights by a factor of four. This makes the model smaller and cheaper to run, and therefore easier to deploy on resource-constrained devices such as smartphones and embedded systems.
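
A minimal sketch of the idea, assuming the simplest scheme: symmetric, per-tensor 8-bit quantization, where one floating-point scale maps the largest absolute weight to 127. Production toolkits add refinements (per-channel scales, zero points, calibration), so treat the function names and choices here as illustrative.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor quantization to integers in [-127, 127].

    A single scale is shared by the whole tensor.
    """
    max_abs = max(abs(w) for w in weights)
    # Guard against an all-zero tensor, which would otherwise give a zero scale.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round each value to the nearest integer step and clamp to the int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate floating-point values from integers and the scale."""
    return [v * scale for v in q]


if __name__ == "__main__":
    weights = [0.5, -1.27, 0.003, 1.0]  # made-up example weights
    q, scale = quantize_int8(weights)
    print(q, scale)
    print(dequantize(q, scale))
```

Each integer fits in one byte, and the rounding error per weight is at most half a quantization step (`scale / 2`). This is why accuracy usually degrades only slightly.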

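Quantization can be performed using various techniques, including post-training quantization (PTQ) and quantization-aware training (QAT). PTQ converts an already trained model to a lower-precision format, typically using a small calibration dataset and no retraining, which makes it cheap to apply. QAT instead simulates quantization during training (or fine-tuning): the forward pass uses quantized weights and activations, while the optimizer still updates full-precision copies of the weights, so the model learns to compensate for rounding error. Both techniques aim to minimize the loss of accuracy while reducing the model size.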
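
The QAT mechanism described above is often implemented with "fake quantization" plus a straight-through estimator (STE). The toy sketch below fits a single weight in `y ≈ w * x`. The 4-bit grid, the fixed scale of 0.1, the learning rate, and the data are all assumptions chosen for illustration, not settings from any particular framework.

```python
def fake_quant(w: float, scale: float, qmin: int = -8, qmax: int = 7) -> float:
    """Quantize w to a signed 4-bit grid and immediately dequantize it."""
    q = max(qmin, min(qmax, round(w / scale)))
    return q * scale


def train_qat(xs: list[float], ys: list[float], scale: float,
              lr: float = 0.05, steps: int = 200) -> tuple[float, float]:
    """Fit y ~ w * x while the forward pass only sees the fake-quantized weight."""
    w = 0.0  # full-precision "shadow" weight that the optimizer updates
    n = len(xs)
    for _ in range(steps):
        w_q = fake_quant(w, scale)  # forward pass uses the quantized value
        # Gradient of the mean squared error with respect to w_q.
        grad = sum(2 * (w_q * x - y) * x for x, y in zip(xs, ys)) / n
        # Straight-through estimator: treat d(w_q)/d(w) as 1, so the
        # gradient is applied to the float weight unchanged.
        w -= lr * grad
    return w, fake_quant(w, scale)


if __name__ == "__main__":
    xs = [1.0, 2.0, -1.0, 0.5]
    ys = [0.3 * x for x in xs]  # hypothetical target: true weight is 0.3
    w_float, w_deployed = train_qat(xs, ys, scale=0.1)
    print(w_float, w_deployed)
```

The float weight `w_float` never needs to equal 0.3 exactly. Training only has to push it into the rounding interval that maps to the right grid point, and the deployed model keeps just the quantized value.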

Table 1: Comparison of Quantization Techniques

| Aspect | Post-Training Quantization (PTQ) | Quantization-Aware Training (QAT) |
|---|---|---|
| Accuracy | Typically some loss compared to full precision | Typically higher than PTQ, closer to full precision |
| Model Size | Smaller than full precision | Smaller than full precision |
| Computational Efficiency | Higher than full precision | Higher than full precision |

Bit AI and Energy Efficiency

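Energy efficiency is a critical concern in AI, especially as models become larger and more complex. Bit AI helps address this challenge by reducing the computational requirements of AI models: lower-precision values make each arithmetic operation cheaper and reduce the amount of data moved between memory and processors, which often accounts for a large share of the energy a model consumes. As a result, AI systems that use lower-precision representations can be more energy-efficient, and therefore more sustainable and environmentally friendly.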

One notable example is BiLLM, a binarization method for large language models developed by a team including researchers from the University of Hong Kong and ETH Zurich. BiLLM uses post-training quantization (PTQ) to compress the weights of large language models to roughly 1.1 bits per weight on average. It binarizes most weights to a single bit and spends extra bits only on a small set of salient weights. According to its authors, this approach greatly reduces model size while retaining considerably more accuracy than earlier binarization methods, making it attractive for energy-efficient AI applications.
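
The basic building block of weight binarization can be sketched as follows. Each weight is replaced by its sign times a shared scale α, where α = mean(|w|) minimizes the squared error for those signs. This is the generic textbook form, not BiLLM's full algorithm. The `average_bits` helper and its 10% split are hypothetical. They only show why mixing 1-bit weights with a few higher-precision ones yields a fractional average such as 1.1 bits, and real schemes also count overhead such as scales and index information.

```python
def binarize(weights: list[float]) -> tuple[list[int], float]:
    """Approximate each weight as alpha * sign(w) with one shared scale.

    For fixed signs, alpha = mean(|w|) minimizes the squared reconstruction error.
    """
    alpha = sum(abs(w) for w in weights) / len(weights)
    signs = [1 if w >= 0 else -1 for w in weights]  # 1 bit per weight
    return signs, alpha


def average_bits(num_weights: int, num_salient: int, extra_bits: int = 1) -> float:
    """Average storage per weight when a few 'salient' weights get extra bits.

    Hypothetical accounting: ignores scales and other metadata.
    """
    return (num_weights * 1 + num_salient * extra_bits) / num_weights


if __name__ == "__main__":
    signs, alpha = binarize([0.5, -1.5, 2.0, -1.0])
    print(signs, alpha)  # [1, -1, 1, -1] 1.25
    # Hypothetical split: 10% of weights get one extra bit.
    print(average_bits(1000, 100))  # 1.1
```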

Bit AI and Real-World Applications

Bit AI has a wide range of real-world applications, from mobile devices to autonomous vehicles. In mobile devices, bit AI enables AI models to run efficiently on limited hardware resources, improving battery life and overall performance. In autonomous vehicles, bit AI helps reduce the computational load, enabling real-time decision-making and enhancing safety.

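One prominent example is Mistral 7B, a language model with roughly 7 billion parameters released by the French startup Mistral AI. The model is designed to be efficient and compact, partly through architectural choices such as grouped-query attention and sliding-window attention. Mistral AI released its weights in 16-bit precision, which take about 14 GB, but the model is widely run in 4-bit quantized form. This shrinks the weights to roughly 4 GB and significantly reduces computational requirements, so the model fits comfortably on a single consumer GPU.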
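
A rough picture of what 4-bit quantization involves in practice is sketched below: weights are split into groups, each group gets its own scale, and two 4-bit codes are packed into every byte. This is a generic illustration under stated assumptions. It is not Mistral AI's format or the exact layout used by tools such as GPTQ, GGUF, or bitsandbytes, and the group size of 4 is chosen only to keep the example small (real schemes typically use groups of 32 to 128 weights).

```python
def quantize_4bit_grouped(weights: list[float], group_size: int = 4) -> tuple[list[int], list[float]]:
    """Quantize to signed 4-bit codes in [-8, 7] with one scale per group."""
    codes, scales = [], []
    for start in range(0, len(weights), group_size):
        group = weights[start:start + group_size]
        max_abs = max(abs(w) for w in group)
        scale = max_abs / 7 if max_abs > 0 else 1.0  # map largest |w| to code 7
        scales.append(scale)
        codes.extend(max(-8, min(7, round(w / scale))) for w in group)
    return codes, scales


def dequantize_grouped(codes: list[int], scales: list[float], group_size: int) -> list[float]:
    """Multiply each code by the scale of the group it belongs to."""
    return [c * scales[i // group_size] for i, c in enumerate(codes)]


def pack_nibbles(codes: list[int]) -> bytes:
    """Store two signed 4-bit codes per byte (low nibble first)."""
    if len(codes) % 2:
        codes = codes + [0]  # pad to an even count
    out = bytearray()
    for lo, hi in zip(codes[0::2], codes[1::2]):
        # '& 0xF' keeps the low 4 bits of the two's-complement value.
        out.append((lo & 0xF) | ((hi & 0xF) << 4))
    return bytes(out)


def unpack_nibbles(packed: bytes, count: int) -> list[int]:
    """Inverse of pack_nibbles: recover `count` signed 4-bit codes."""
    codes = []
    for byte in packed:
        for nibble in (byte & 0xF, byte >> 4):
            codes.append(nibble - 16 if nibble >= 8 else nibble)  # sign-extend
    return codes[:count]


if __name__ == "__main__":
    weights = [0.7, -0.35, 0.0, 0.1, 2.0, -2.0, 1.0, 0.5]  # made-up values
    codes, scales = quantize_4bit_grouped(weights, group_size=4)
    packed = pack_nibbles(codes)
    restored = dequantize_grouped(unpack_nibbles(packed, len(codes)), scales, 4)
    print(len(weights), "weights ->", len(packed), "bytes of codes +", len(scales), "scales")
    print(restored)
```

The per-group scales are why real 4-bit formats average slightly more than 4 bits per weight. For example, a 16-bit scale shared by 32 weights adds 16 / 32 = 0.5 bits, for about 4.5 bits per weight in total.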

Conclusion

Bit AI is a powerful tool for making artificial intelligence more practical. By choosing how many bits to spend on each value, AI systems can become smaller, faster, and more energy-efficient while keeping accuracy close to that of full-precision models. As AI continues to evolve, the role of bit AI is likely to grow, enabling new applications and advancements in